|

I am currently a first-year Ph.D. student at the School of Computing, National University of Singapore, under the supervision of Prof. Lin Shao. My research interests focus primarily on robotic manipulation and 3D perception. I also actively collaborate with industry partners, conducting research on robotic manipulation at Tsinghua AIRIC. Previously, I interned at research institutes such as Tsinghua AIR and Beihang Robotics Institute, and at startups such as EncoSmart and LightIllusions, where I gained extensive experience in mechanical structure design and computer vision research. I am also an active member of AnySyn3D, a non-profit research interest group organized by Prof. Xiaoxiao Long whose members share a strong interest in exploring research problems and cutting-edge technologies in any 3D topic. Email / CV / Google Scholar / GitHub / |

|

|

My long-term research goal is to build agents that efficiently understand the physical world. These agents will then apply their knowledge to interact with the physical world, fostering adaptability and enabling continuous skill acquisition. I am therefore open to any related research, and I am currently focusing on {3D Vision, Robotic Manipulation} for Embodied AI:

|

|

|

|

Yuhang Zheng, Xiangyu Chen, Yupeng Zheng, Songen Gu, Runyi Yang, Bu Jin, Pengfei Li, Chengliang Zhong, Zengmao Wang, Lina Liu, Chao Yang, Dawei Wang, Zhen Chen, Xiaoxiao Long†, Meiqing Wang†. RA-L, 2024 [Homepage] [arXiv] [Code] We introduced GaussianGrasper, a robot grasping system implemented by a 3D Gaussian field endowed with open-vocabulary semantics and accurate geometry that is capable of rapid updates to support open-world robotic grasping guided by language. |

|

Xiaoxiao Long*, Yuhang Zheng*, Yupeng Zheng, Beiwen Tian, Cheng Lin, Lingjie Liu, Zhao Hao†, Guyue Zhou, Wenping Wang†. TPAMI, 2024 [Homepage] [arXiv] [Code] We presented a simple but effective Adaptive Surface Normal (ASN) constraint to capture reliable geometric context, utilized to jointly estimate depth and surface normal with high quality. |

|

Shengjie Xiao, Yongqi Shi, Zemin Wang, Zhe Ni, Yuhang Zheng, Huichao Deng†, Xilun Ding Frontiers of Mechanical Engineering, 2024 [Paper] We presented a new lift system with high lift and aerodynamic efficiency, which effectively utilizes the high-lift mechanism of hummingbirds to improve aerodynamic performance. |

|

Shaocong Xu, Xiaoxue Chen, Yuhang Zheng, Guyue Zhou, Yurong Chen, Hongbin Zha, Hao Zhao† Image and Vision Computing, 2024 [arXiv] [Code] We designed (1) a two-stage transformer-based network to bridge the relationship between the fine-grained edges (reflectance, illumination, depth and normal) and generic edge detection tasks and (2) a cause-aware decoder, modeling edge cause as four learnable tokens. |

|

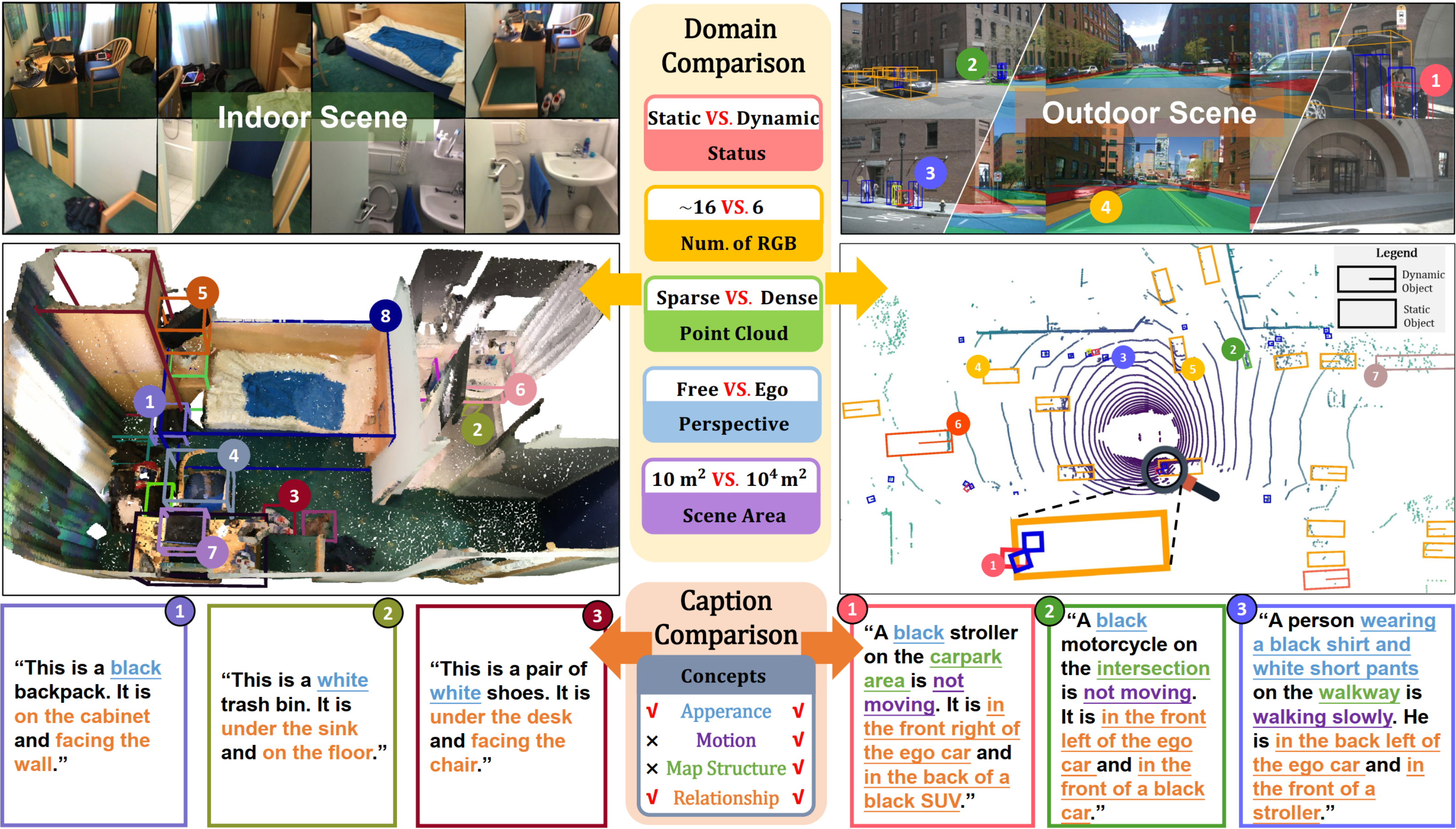

Bu Jin, Yupeng Zheng†, Pengfei Li, Weize Li, Yuhang Zheng, Sujie Hu, Xinyu Liu, Jinwei Zhu, Zhijie Yan, Haiyang Sun, Kun Zhan, Peng Jia, Xiaoxiao Long, Yilun Chen, Hao Zhao ECCV, 2024 [Homepage] [arXiv] [Code] We introduced the new task of outdoor 3D dense captioning together with the TOD3Cap dataset. We also proposed the TOD3Cap network, which leverages the BEV representation to encode sparse outdoor scenes and combines a Relation Q-Former with LLaMA-Adapter to generate dense captions in the open world. |

|

Yupeng Zheng*, Xiang Li*, Pengfei Li, Yuhang Zheng, Bu Jin, Chengliang Zhong, Xiaoxiao Long, Hao Zhao, Qichao Zhang† ICRA, 2024 [arXiv] [Code] We presented MonoOcc, a high-performance and efficient framework for monocular semantic occupancy prediction. We (1) proposed an auxiliary semantic loss as supervision and an image-conditioned cross-attention module to refine voxel features, and (2) employed a distillation module to transfer richer knowledge. |

|

Chengliang Zhong, Yuhang Zheng, Yupeng Zheng, Hao Zhao†, Li Yi, Xiaodong Mu, Ling Wang, Pengfei Li, Guyue Zhou, Chao Yang, Xinliang Zhang, Jian Zhao ICCV, 2023, Oral presentation [arXiv] [Code] We presented 3D Implicit Transporter, a self-supervised method to discover temporally correspondent 3D keypoints from point cloud sequences. Extensive evaluations show that our keypoints are temporally consistent for both rigid and nonrigid object categories. |

|

Zhijie Yan, Pengfei Li, Zheng Fu, Shaocong Xu, Yongliang Shi, Xiaoxue Chen, Yuhang Zheng, Yang Li, Tianyu Liu, Chuxuan Li, Nairui Luo, Xu Gao, Yilun Chen, Zuoxu Wang, Yifeng Shi, Pengfei Huang, Zhengxiao Han, Jirui Yuan, Jiangtao Gong, Guyue Zhou, Hang Zhao, Hao Zhao† ICCV, 2023 [Homepage] [Dataset] [Code] We presented a new interactive trajectory prediction dataset named INT2, which is short for INTeractive trajectory prediction at INTersections with high quality, large scale and rich information. |

|

Yuhang Zheng*, Qiyao Wang*, Chengliang Zhong†, He Liang, Zhengxiao Han, Yupeng Zheng† CICAI, 2023 🏆Best demo award [Paper] We developed an intelligent desktop operating robot designed to assist humans in their daily lives by comprehending natural language with large language models and performing a variety of desktop-related tasks. |

|

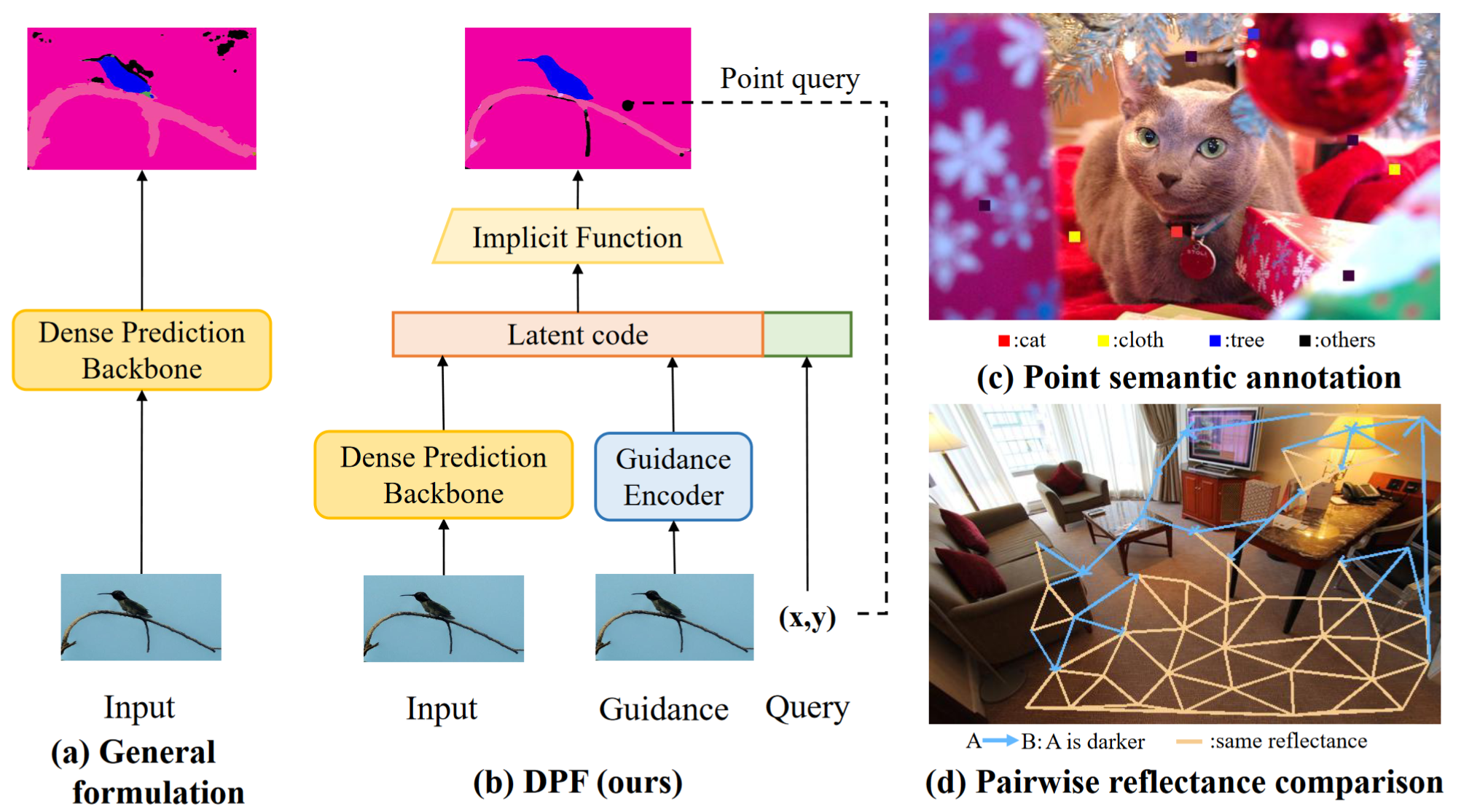

Xiaoxue Chen, Yuhang Zheng, Yupeng Zheng, Qiang Zhou, Hao Zhao†, Guyue Zhou, Ya-Qin Zhang CVPR, 2023 [arXiv] [Code] We proposed dense prediction fields (DPFs), a new paradigm that makes dense value predictions for point coordinate queries. An implicit neural function is used to model the DPFs, which are compatible with point-level supervision. |

|

Bu Jin, Xinyu Liu, Yupeng Zheng, Pengfei Li, Hao Zhao†, Tong Zhang, Yuhang Zheng, Guyue Zhou, Jingjing Liu ICRA, 2023 [arXiv] [Code] We presented ADAPT (Action-aware Driving cAPtion Transformer), a new end-to-end transformer-based framework for generating action narration and reasoning for self-driving vehicles. |

|

Yupeng Zheng, Chengliang Zhong, Pengfei Li, Huan-ang Gao, Yuhang Zheng, Bu Jin, Ling Wang, Hao Zhao, Guyue Zhou, Qichao Zhang, Dongbin Zhao† ICRA, 2023 [arXiv] [Code] We presented STEPS, the first method that jointly learns a nighttime image enhancer and a depth estimator in a self-supervised manner. A newly proposed uncertain pixel masking strategy is used to tightly entangle these two tasks. |

|

|

|

Meiqing Wang, Yuhang Zheng, Zijian Wu, Chenhao Ye, Hao Luo Chinese Invention Patent, Substantive Examination. CN117102738A, 2023

Meiqing Wang, Yuhang Zheng, Zijian Wu, Hao Luo, Chenhao Ye Chinese Invention Patent, Substantive Examination. CN117133010A, 2023

Meiqing Wang, Yuhang Zheng, Jinjian Duan Chinese Invention Patent, Substantive Examination. CN115577709A, 2023

Huichao Deng, Zhe Ni, Zemin Wang, Yuhang Zheng, Yongqi Shi, Shutong Zhang Chinese Invention Patent, Patent Grant. CN113148145B, 2022

Huichao Deng, Yongqi Shi, Yuhang Zheng, Zemin Wang, Zhe Ni, Shutong Zhang Chinese Invention Patent, Patent Grant. CN113148146B, 2022 |

|

Outside of research, I enjoy playing basketball🏀, swimming🏊, and traveling🚢. |

|

Website template from Jon Barron. |